Hume's empiricism vs. AI knowledge

Recently, OpenAI CEO Sam Altman stated that his child will never be smarter than an AI. The bold claim rests on the rapid progress of artificial intelligence, which has led many to believe that machines will soon surpass human intelligence. Sam's statement raises questions about the nature of knowledge and intelligence that we, as users of AI, should consider.

The theory of knowledge

Being smart is closely tied to what Aristotle called phronesis, or practical wisdom: the ability to make good judgements by applying one's knowledge to real-world situations.

Since practical wisdom depends on knowledge, we can say that Sam's claim is an epistemological one; that is, it concerns the nature of knowledge.

Philosophers have debated the nature of knowledge at least since Socrates, who famously held that his wisdom lay in knowing that he knew nothing. In modern philosophy, one of the most influential epistemological views is empiricism, which, in David Hume's formulation, asserts that all knowledge comes from sensory data.

Building on Locke's image of the mind as a blank slate, Hume argued that we gain knowledge through experience. This means that we can only know what we can see, hear, touch, taste, or smell. Anything beyond that is mere speculation. This view directly countered both rationalism (the belief that knowledge can come from reason alone) and medieval scholasticism (the idea that knowledge comes from the senses together with metaphysical, divine truths).

More specifically, Hume called a direct sensory experience an impression, and the mental representation of that experience an idea. For example, when we see a tree, the impression is the actual sight of the tree, while the idea is our later mental image of it, a fainter copy of the impression. According to Hume, without impressions we cannot form ideas.

How do AIs learn?

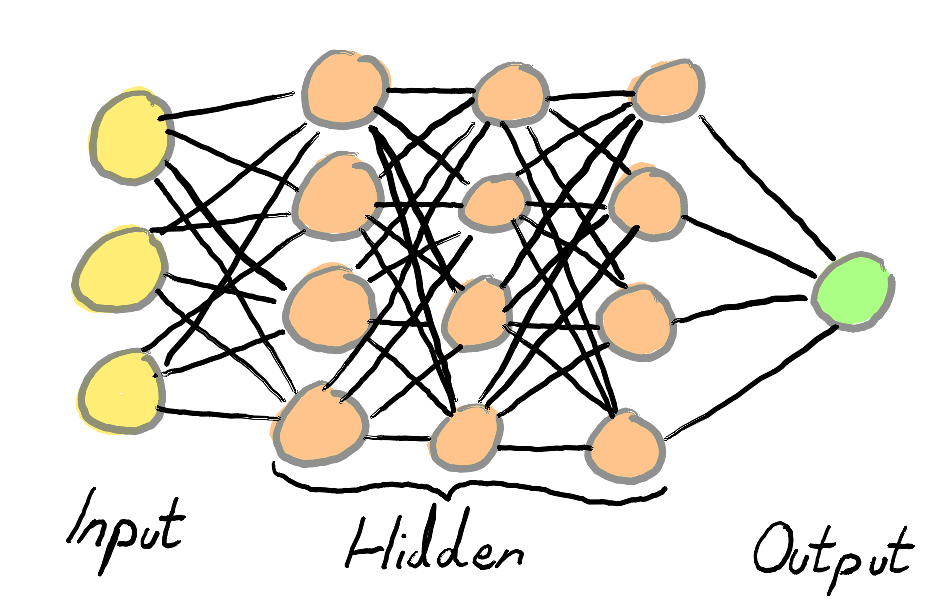

Neural networks have quite the catchy name. They are inspired by the human brain, which is made up of billions of neurons that communicate with each other through electrical and chemical signals. However, this does not mean that neural networks are equivalent to human brains. Under the hood, an artificial neuron is a rather simple mathematical function: it multiplies each input by a weight, adds the results together with a bias, and passes the sum through an activation function to produce an output. Yet most modern AI models, including LLMs such as ChatGPT, are built on this principle.
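To make this concrete, here is a minimal sketch of a single artificial neuron in Python. The sigmoid activation and the input, weight, and bias values are assumptions chosen purely for illustration; real networks often use other activations such as ReLU.

```python
import math

def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of inputs plus a bias, passed through a sigmoid."""
    # Weighted sum: each input is multiplied by its own weight, then the bias is added
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    # Sigmoid activation squashes z into the range (0, 1)
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical values chosen only for illustration
output = neuron([0.5, 0.2, 0.9], weights=[0.4, -0.6, 0.3], bias=0.1)
print(output)
```

That is the whole neuron: a few multiplications, a sum, and a squashing function.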

What are weights and biases, and why do they matter? They are the adjustable numbers (parameters) through which a neural network learns from data. During training, the network has one simple goal: to minimize the error between its predictions and the correct answers. To achieve this, it uses an algorithm called backpropagation to compute how much each weight and bias contributed to the error, and then nudges each of them in the direction that reduces it (gradient descent).
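A minimal sketch of training a single sigmoid neuron by gradient descent, assuming a made-up toy dataset (the logical OR function), binary cross-entropy as the error measure, and an arbitrary learning rate of 0.5. With only one neuron, backpropagation reduces to a single application of the chain rule; in a deeper network the same derivatives are passed back layer by layer.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical toy dataset: two input features and a 0/1 label (logical OR)
data = [([0.0, 0.0], 0), ([0.0, 1.0], 1), ([1.0, 0.0], 1), ([1.0, 1.0], 1)]

weights = [0.0, 0.0]
bias = 0.0
learning_rate = 0.5  # assumed step size

def mean_loss():
    # Binary cross-entropy between predictions and labels, averaged over the dataset
    total = 0.0
    for x, y in data:
        p = sigmoid(sum(xi * wi for xi, wi in zip(x, weights)) + bias)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(data)

initial_loss = mean_loss()
for epoch in range(1000):
    grad_w = [0.0, 0.0]
    grad_b = 0.0
    for x, y in data:
        p = sigmoid(sum(xi * wi for xi, wi in zip(x, weights)) + bias)
        # Chain rule: for sigmoid + cross-entropy, dLoss/dz simplifies to (p - y)
        error = p - y
        for i, xi in enumerate(x):
            grad_w[i] += error * xi
        grad_b += error
    # Gradient descent: move each parameter a small step against its average gradient
    weights = [w - learning_rate * g / len(data) for w, g in zip(weights, grad_w)]
    bias -= learning_rate * grad_b / len(data)

final_loss = mean_loss()
print(f"loss: {initial_loss:.3f} -> {final_loss:.3f}")
```

Nothing in the loop refers to what the inputs mean: the code only adjusts three numbers until the error shrinks.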

So, the model itself is not "learning" at all. It is simply adjusting numbers to fit a pattern. By definition, it does not understand the data it is processing: it is merely a mathematical function that maps inputs to outputs. This means that it skips the entire Humean process of forming impressions and ideas. It does not "know" anything.

Imagine that we are using a neural network to classify grayscale images of cats and dogs. The input layer reads the brightness of each pixel, a number between 0 (black) and 255 (white). These inputs are then passed through several hidden layers, where each neuron computes a weighted sum of its inputs, adds its bias, and applies its activation function. Finally, the output layer tells us whether the image is more likely to be a cat or a dog. The error-reducing adjustments happen only during training; once trained, the network simply applies its fixed weights and biases.
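The forward pass described above can be sketched with NumPy. This is a hypothetical, untrained network: the 28x28 image size, the single hidden layer of 16 neurons, the ReLU and sigmoid activations, and the random weights are all assumptions, so its cat/dog verdict is meaningless. It only shows how pixel values flow through weighted sums to a single number.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

# Hypothetical 28x28 grayscale image: each pixel is an integer from 0 (black) to 255 (white)
image = rng.integers(0, 256, size=(28, 28))

# Input layer: flatten to a vector of 784 numbers and scale them to [0, 1]
x = image.reshape(-1) / 255.0

# Randomly initialised (untrained) parameters; training would adjust these
W1 = rng.normal(0.0, 0.1, size=(16, 784))  # hidden layer: 16 neurons
b1 = np.zeros(16)
W2 = rng.normal(0.0, 0.1, size=16)         # output layer: 1 neuron
b2 = 0.0

# Hidden layer: weighted sums plus biases, then a ReLU activation
h = np.maximum(0.0, W1 @ x + b1)

# Output layer: one score squashed by a sigmoid into a probability of "dog"
p_dog = 1.0 / (1.0 + np.exp(-(W2 @ h + b2)))
print("cat" if p_dog < 0.5 else "dog", float(p_dog))
```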

In this example, the neural network is not actually seeing or interpreting the images the way humans do. In fact, a simple fully connected network like this one does not even receive the image in 2D, but as a flattened one-dimensional list of numbers that would be unintelligible to us. It never understands what a cat or a dog is; it only learns to associate patterns of pixel values with labels.
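A small NumPy sketch of that flattening step, using a hypothetical 4x4 image whose pixel values are simply 0 to 15. Two pixels that sit directly on top of each other in 2D end up four positions apart in the flat vector, so the network is not told explicitly which numbers were neighbours.

```python
import numpy as np

# Hypothetical 4x4 grayscale "image" whose pixel values are just 0..15
image = np.arange(16).reshape(4, 4)

# Flatten row by row (NumPy's default C order), as a fully connected input layer receives it
flat = image.reshape(-1)

# Pick the pixel at row 1, column 2, and the pixel directly below it (row 2, column 2)
row, col, width = 1, 2, image.shape[1]
idx_pixel = row * width + col        # index 6 in the flat vector
idx_below = (row + 1) * width + col  # index 10: adjacent in 2D, four positions apart in 1D
print(flat)
print(flat[idx_pixel], flat[idx_below])
```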

Empiricism in academia

The leading philosophy of AI research today (and of most science, really) is still empiricism. This is not surprising, given that the scientific method itself is based on observation and experimentation. However, it also means that we are limited to what we can observe and measure, setting aside all metaphysical considerations.

We can construct a syllogism from this:

- Humean empiricism states that all knowledge comes from sensory data (impressions).

- AIs have no impressions; they only try to minimize error on pre-encoded data.

- Therefore, AIs cannot possess knowledge.
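Written in predicate logic, the syllogism takes the form of modus tollens. The symbols are labels introduced here for illustration: $K(x)$ for "$x$ possesses knowledge", $I(x)$ for "$x$ has impressions", and $a$ for an AI model. The form is valid, so anyone rejecting the conclusion has to reject one of the premises.

```latex
\begin{align*}
&\forall x\,\bigl(K(x) \rightarrow I(x)\bigr) && \text{(all knowledge comes from impressions)}\\
&\neg I(a) && \text{(an AI has no impressions)}\\
&\therefore\ \neg K(a) && \text{(modus tollens)}
\end{align*}
```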

In no way does this mean that AIs are useless. On the contrary, understanding this fact allows us to better use them as the tools they are. They can process enormous amounts of data and find patterns that would be impossible for humans to detect.

But let us not forget that AI is just that: a tool. It does not possess knowledge. It is not, and cannot ever be, smarter than us.

Matei Cananau

MSc Machine Learning student writing on AI, philosophy, and technology that serves the human person.